WellEZ Connection

Python Code

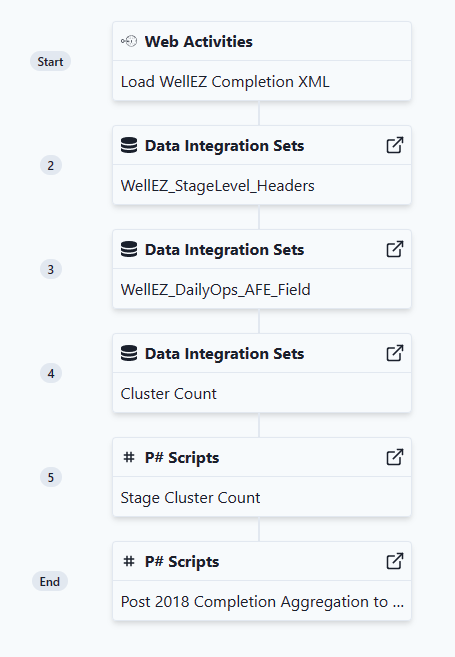

WellEZ sends its data to an SFTP server as a zip file (only if the client is sending an XML file). To read the data, the file needs to be unzipped and then connected to PetroVisor.

An Azure pipeline gets the file and unzips it. Python code then cleans the data and returns it to the SFTP server as a CSV. A simple workflow in PetroVisor runs this external activity and the data integration needed.
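For illustration only, the unzip step can be sketched in Python. In the process described here the Azure pipeline does the unzipping, so the helper name `extract_xml_members` and the in-memory demo archive below are assumptions, not part of the actual pipeline.

```python
import io
import zipfile


def extract_xml_members(zip_bytes):
    """Return {member name: raw bytes} for every .xml file in a zip archive."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        return {
            name: archive.read(name)
            for name in archive.namelist()
            if name.lower().endswith(".xml")
        }


# Demo: build a small archive in memory (made-up contents) and extract it.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("report.xml", "<Report well_id='W1'/>")
    archive.writestr("readme.txt", "ignored")

extracted = extract_xml_members(buffer.getvalue())
```

Only the XML members are returned, so any other files in the archive are skipped before parsing.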

To modify the code for a client, note that the code looks for the required user-defined field.

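    def transform_xml_UserDefinedItem_10(xml_doc):
        # Attributes of the root element are shared by every row
        attr = xml_doc.attrib
        for xml in xml_doc.findall("./UserDefinedItem_10/"):
            # Copy the shared attributes, then add this element's own
            row = attr.copy()
            row.update(xml.attrib)
            yield row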

In the function above, "UserDefinedItem_10" is the item in the Excel file that needs to be associated.

In order to reuse this code in other Workspaces, the "User Defined" information in the table and the transform need to be updated for each client. WellEZ should have a client dictionary that has each field defined.
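One way to make the transform easier to reuse is to pass the item name in as a parameter instead of hard-coding it. The sketch below assumes the XML is parsed with `xml.etree.ElementTree`; the function name `transform_xml_items` and the sample XML layout are hypothetical and may not match an actual WellEZ export.

```python
import xml.etree.ElementTree as ET


def transform_xml_items(xml_doc, item_tag):
    """Yield one dict per child of <item_tag>, merged with the root attributes."""
    root_attrs = xml_doc.attrib
    # "./<item_tag>/*" selects every child element of the container element.
    for element in xml_doc.findall(f"./{item_tag}/*"):
        row = dict(root_attrs)
        row.update(element.attrib)
        yield row


# Made-up sample document; real exports will have different fields.
SAMPLE_XML = """
<Report well_id="W1" report_Date="2023-01-01">
  <UserDefinedItem_10>
    <Item PickList_1="1" UserDefined_10="5000"/>
    <Item PickList_1="2" UserDefined_10="5100"/>
  </UserDefinedItem_10>
</Report>
"""

root = ET.fromstring(SAMPLE_XML.strip())
rows = list(transform_xml_items(root, "UserDefinedItem_10"))
```

With this shape, switching to another client's item would mean changing only the `item_tag` argument and the column mapping.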

The UserDefinedItem_10 data is put into a data frame. This step passes the data through the code and cleans it. Every signal that needs to be brought into the platform must be defined here.

    # static numeric table
    trans_userdefineditem10 = transform_xml_UserDefinedItem_10(myroot)
    trans_wellinfo = wellinfo(myroot)

    df_userdefineditem10 = pd.DataFrame(list(trans_userdefineditem10))
    # Drop rows that have no stage number (PickList_1)
    df_userdefineditem10 = df_userdefineditem10.dropna(subset=['PickList_1']).reset_index(drop=True)
    df_wellinfo = pd.DataFrame(list(trans_wellinfo))
    df = pd.merge(df_userdefineditem10, df_wellinfo, on='well_id', how='inner')

    # Build the entity name and move it to the first column
    df['entity'] = "Stg " + df["PickList_1"] + " " + df["WellName"]
    first_column = df.pop('entity')
    df.insert(0, 'entity', first_column)
    df = df.dropna(subset=['entity'])

    #static_columns = ['entity', 'report_Date', 'UserDefined_1_x','PickList_6','PickList_4','PickList_3','PickList_2','PickList_10','PickList_1']
    #df = df.drop(columns = [col for col in df if col not in static_columns])

    # Rename the WellEZ fields to the signal names used in the platform
    df = df.rename({
        'report_Date': 'Report date',
        'UserDefined_1': 'formation',
        'PickList_6': 'proppant type 4',
        'PickList_4': 'proppant type 3',
        'PickList_3': 'proppant type 2',
        'PickList_2': 'proppant type 1',
        'PickList_10': 'treatment fluid type',
        'PickList_1': 'stage number',
        'UserDefined_9': 'max treat pressure',
        'UserDefined_8': 'max treat rate',
        'UserDefined_7': 'average treat pressure',
        'UserDefined_6': 'average treat rate',
        'UserDefined_5': 'breakdown',
        'UserDefined_43': 'Est. Stage cost',
        'UserDefined_4': 'breakdown pressure',
        'UserDefined_3': 'breakdown rate',
        'UserDefined_29': 'min treat pressure',
        'UserDefined_25': 'proppant amount type 4',
        'UserDefined_24': 'flush',
        'UserDefined_21': '% produced water',
        'UserDefined_20': '% fresh water',
        'UserDefined_2': 'initial ISIP',
        'UserDefined_19': 'stage slurry',
        'UserDefined_18': 'total acid',
        'UserDefined_17': 'proppant amount type 3',
        'UserDefined_16': 'proppant amount type 2',
        'UserDefined_15': 'proppant amount type 1',
        'UserDefined_14': 'frac gradient',
        'UserDefined_12': 'max concentration',
        'UserDefined_11': 'stage clean',
        'UserDefined_10': 'final ISIP',
    }, axis=1)
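The merge, entity naming, and rename steps above can be tried on toy data to see what the resulting table looks like. This is a minimal sketch assuming pandas is installed; the well names and values are made up, and only two of the renamed fields are shown.

```python
import pandas as pd

# Toy stand-ins for the two frames built from the XML (values are made up).
df_stage = pd.DataFrame([
    {"well_id": "W1", "PickList_1": "1", "UserDefined_10": "5000"},
    {"well_id": "W1", "PickList_1": None, "UserDefined_10": "5100"},
    {"well_id": "W2", "PickList_1": "3", "UserDefined_10": "4800"},
])
df_well = pd.DataFrame([
    {"well_id": "W1", "WellName": "Alpha 1H"},
    {"well_id": "W2", "WellName": "Bravo 2H"},
])

# Rows without a stage number are dropped before the merge.
df_stage = df_stage.dropna(subset=["PickList_1"]).reset_index(drop=True)
df = pd.merge(df_stage, df_well, on="well_id", how="inner")

# The entity name combines the stage number and the well name.
df.insert(0, "entity", "Stg " + df["PickList_1"] + " " + df["WellName"])
df = df.rename(columns={"PickList_1": "stage number", "UserDefined_10": "final ISIP"})

entities = df["entity"].tolist()
```

Each remaining row gets one entity such as "Stg 1 Alpha 1H", and the stage row with no `PickList_1` value does not appear in the output.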

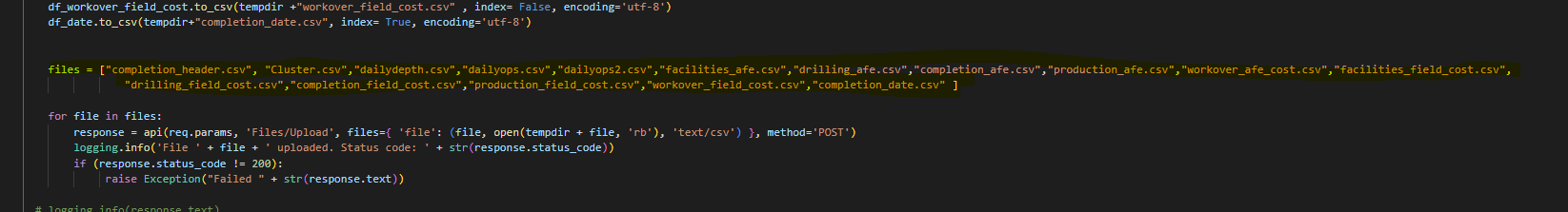

After it pulls all the data, the code writes it to a CSV file for upload to the SFTP server.

    # generating csv files
    import os

    tempdir = tempfile.gettempdir()
    # gettempdir() returns no trailing separator, so join the path explicitly
    df.to_csv(os.path.join(tempdir, "completion_header.csv"), index=False, encoding='utf-8')

After the file is converted to CSV, its name is added to the "files" list (highlighted in yellow) so that it can be uploaded back to the SFTP server.
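The pattern of writing a CSV into a temporary directory and recording its path in a `files` list might look like the sketch below. It uses the standard-library `csv` module instead of `DataFrame.to_csv` so that it runs without pandas; the helper name `write_csv` and the sample rows are hypothetical. The upload itself is left out because this document does not say which SFTP client the code uses.

```python
import csv
import os
import tempfile


def write_csv(rows, filename, directory):
    """Write a list of dicts to <directory>/<filename> and return the full path."""
    path = os.path.join(directory, filename)
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)
    return path


# Made-up rows standing in for the cleaned data frame.
rows = [
    {"entity": "Stg 1 Alpha 1H", "stage number": "1"},
    {"entity": "Stg 3 Bravo 2H", "stage number": "3"},
]

workdir = tempfile.mkdtemp()  # a fresh directory keeps the demo self-contained
files = []
files.append(write_csv(rows, "completion_header.csv", workdir))
# Every path collected in `files` would then be uploaded to the SFTP server.
```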

Working in PetroVisor

Use the Workflow "WellEz."